November 25, 2025

Questions to Ask for a Better Insights Stack: A Framework for Evaluating Research Tools

By

Liz White

Studio by Buzzback's Liz White and User Interviews' Ben Wiedmaier shared practical strategies for building a best-in-class research toolstack at Quirks Virtual Global '25. Watch the entire session below.

Table of Contents

- Introduction: Why This Matters Now

- The Current State of Insights Tools

- The Vetting Framework: Three Critical Questions

- Real Stories from Real Leaders

- The ABR Framework: Always Be Researching

- How Studio Builds Best-in-Class Research Tools

- Key Takeaways

- FAQ

Introduction: Why This Matters Now

The research technology landscape has exploded. With over 800 tools featured in recent UX maps and hundreds more in the latest ResTecher landscape, insights professionals face an overwhelming paradox: more options than ever, but less clarity on how to choose the right ones.

At Quirks Virtual Global '25, Liz White, Managing Director of Studio by Buzzback, partnered with Ben Wiedmaier, Research Relations at User Interviews, to present "Questions to Ask for a Better Insights Stack: A Framework for Evaluating Research Tools." The presentation tackled a universal challenge: How do you build a research toolstack that actually delivers on its promises?

The answer isn't about finding the "perfect" tool. It's about developing a rigorous evaluation framework, treating tool selection with the same methodological rigor you apply to your research projects.

The Current State of Insights Tools: A Crowded Landscape

The Numbers Tell the Story

The sheer volume of research technology options is staggering:

- 800+ tools featured in the recent UX research tool map

- Hundreds more in the ResTecher landscape covering full-stack market research solutions

- Four distinct layers of research partners, each serving different needs

The Four Layers of the Research Ecosystem

Understanding where different solutions fit helps you build a more strategic stack:

1. Strategic Agencies

- Best for: Brand positioning, major landscape studies, deeper strategic partnerships

- When to use: High-stakes research requiring extensive expertise and consultation

2. DIY Platforms

- Best for: Quick pulse checks, ongoing tracking

- When to use: When you have internal research expertise and need speed and flexibility

- Trade-off: Requires significant in-house capability

3. Specialized Tools

- Best for: Filling specific workflow gaps

- Examples: Recruiting platforms, analysis tools, transcription services

- When to use: When you need best-in-class capability for a particular research phase

4. Middle Ground Solutions

- Best for: Expert execution without overhead

- Examples: On-demand moderator networks, platforms with expert support

- When to use: When you need quality execution but with more agility than strategic agencies offer

This is where Studio by Buzzback sits, providing expert qualitative research execution through a vetted moderator marketplace, combined with workflow tools that connect to your existing stack rather than replacing it.

The Vetting Framework: Three Critical Questions

As researchers, we should apply the same rigor to tool and partner selection as we do to our research work. Here are three essential questions that separate truly effective solutions from those that look good in demos but fall short in practice.

Question 1: Are They Speaking Your Language?

The Problem: Many platforms prioritize speed and features without understanding research fundamentals.

Red Flags to Watch For:

- Speed without methodology

- Feature lists that hide quality gaps

- Services that paper over product weaknesses

- Sales reps who can't answer basic methodological questions

What to Look For Instead:

- ✓ Researchers in the room (building and supporting the product)

- ✓ Method guidance baked into the platform

- ✓ Services that enhance the product rather than compensate for it

Real Example from the Field:

"I talked to a CMI Director who told me she sat through a demo where the rep couldn't answer basic questions about respondent profiling and targeting or methodological capabilities. That's not a platform problem, that's a 'we don't actually understand research' problem."

When evaluating tools, pay attention to:

- Can their team speak fluently about research design considerations?

- Do they understand the "why" behind different methodologies?

- Can they advise on best practices, or just show you features?

Question 2: Are They Trying to Be All Things to All People?

The "Flaw-in-One" Problem

Successfully supporting each phase of research—from recruiting to fielding to analysis—requires ongoing resource commitment. "All-in-one" platforms usually mean "meh-in-most."

Why This Matters:

Think about what it takes to excel at just one phase of research:

- Recruiting: Requires constantly refreshed panels, sophisticated matching algorithms, fraud detection systems trained on millions of data points

- Fielding: Needs robust methodology guidance, quality moderators, technical infrastructure for various study types

- Analysis: Demands powerful AI trained on research data, intuitive interfaces, integration with reporting tools

Each of these requires substantial, ongoing investment. Few companies can genuinely excel at all of them simultaneously.

A Better Approach: Build Your Own Best-in-Class Stack

Instead of settling for "good enough" across the board, ask:

- What are your core research jobs?

  - Map out your most frequent research needs

  - Identify where quality matters most vs. where speed is paramount

- Which tools do those jobs best?

  - Look for specialized solutions with deep expertise

  - Evaluate based on your specific use cases, not general capabilities

- What share of your budget should be devoted to each job?

  - Allocate resources based on research volume and strategic importance

  - Don't spread your budget too thin across tools you rarely use

Question 3: What Is Your Evaluation Process?

Adopt Your Own Playbook for Tooling

The most successful research teams apply structured evaluation frameworks to tool selection. One effective approach is Jobs-to-Be-Done theory.

The Jobs-to-Be-Done Evaluation Framework:

Functional Criteria:

- What job are you hiring this tool for?

- Does it do that job better than your current solution?

Emotional Criteria:

- Does this tool increase your confidence in results?

- Will it improve your credibility with stakeholders?

Benefit/Outcome Criteria:

- Will it shift your role from order-taker to strategic partner?

- Can it free up your time or your team's time for higher-value work?

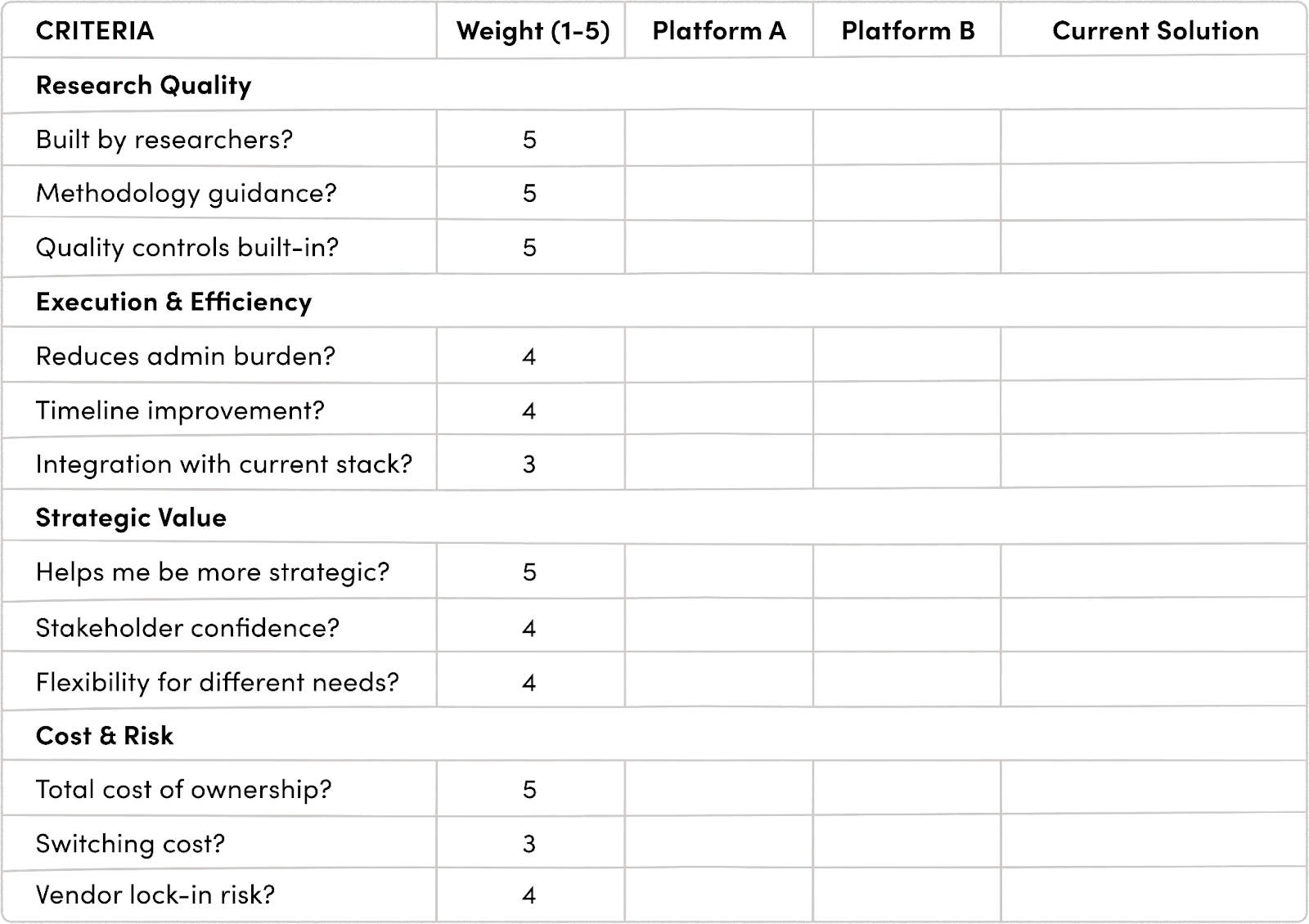

Practical Application: Create a Hiring Scorecard

This scorecard approach forces you to:

- Define what actually matters for your context

- Weight criteria appropriately

- Make evidence-based comparisons

- Document your decision-making process
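As a rough illustration, the weighting step can be sketched in a few lines of Python. This is a minimal sketch, not the scorecard from the session: the weights, the 1-5 rating scale, and the tool names are all hypothetical placeholders, and only the three criteria groups (functional, emotional, outcome) come from the framework above.

```python
from dataclasses import dataclass

# Hypothetical weights for the three criteria groups; adjust to your context.
# They should sum to 1 so the result stays on the same 1-5 scale as the ratings.
WEIGHTS = {"functional": 0.5, "emotional": 0.2, "outcome": 0.3}


@dataclass
class ToolScore:
    name: str
    ratings: dict  # criteria group -> rating on an assumed 1-5 scale


def weighted_score(tool, weights=WEIGHTS):
    """Return the weighted average rating for one tool."""
    missing = set(weights) - set(tool.ratings)
    if missing:
        raise ValueError(f"{tool.name} is missing ratings for: {sorted(missing)}")
    return sum(weights[c] * tool.ratings[c] for c in weights)


def rank_tools(tools, weights=WEIGHTS):
    """Sort tools from highest to lowest weighted score."""
    return sorted(tools, key=lambda t: weighted_score(t, weights), reverse=True)


# Placeholder candidates with made-up ratings, for illustration only.
candidates = [
    ToolScore("Tool A", {"functional": 4, "emotional": 3, "outcome": 5}),
    ToolScore("Tool B", {"functional": 5, "emotional": 4, "outcome": 2}),
]

for tool in rank_tools(candidates):
    print(f"{tool.name}: {weighted_score(tool):.2f}")
# Tool A: 4.10
# Tool B: 3.90
```

Writing the weights down before scoring any vendor keeps the comparison honest: Tool B wins on functional fit alone, but Tool A ranks higher once outcome criteria carry 30% of the weight.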

Get the Tool Evaluation Grid →

Real Stories from Real Leaders

Case Study 1: Calendly's Nimble Approach

The Challenge: Gordon Toon, Research Manager at Calendly, leads a two-person research team supporting diverse stakeholders across a rapidly growing company with a $3B valuation. Each stakeholder group has unique questions and needs.

The Solution: Build Your Own (BYO) Toolstack

Rather than betting on a single all-in-one platform, Calendly created their own constellation of best-in-class tools. This approach delivered:

✓ Agility: Remain nimble to shifting organizational needs without being locked into rigid platform limitations

✓ Quality: Maintain research rigor for stakeholder confidence across different study types

✓ Flexibility: Mix depth and breadth effectively, going deep when strategy demands it, moving fast when speed is critical

The Result: A scrappy two-person team successfully delivers insights at enterprise scale, supporting multiple departments with varying research needs while maintaining quality standards.

Key Lesson: Small teams can punch above their weight by thoughtfully assembling specialized tools rather than settling for the limitations of monolithic platforms.

Case Study 2: Verizon's Integrated Intelligence Engine

The Challenge: Jeff Ulmes, Sr. Director of Customer & Product Insights at Verizon, needed to deliver both speed and depth at massive scale across one of the world's largest telecom companies.

The Solution: Start at the Extremes, Then Fill the Middle

Verizon's approach to building their insights toolstack:

1. Define the Spectrum Endpoints First

- Identified the extremes of their research needs:

  - One end: Massive quantitative data requiring predictive analytics

  - Other end: Deep qualitative human experiences requiring nuanced understanding

2. Build Integration, Not Isolation

- Tools must work together seamlessly

- Data flows between quantitative and qualitative insights

- Standardization accelerates insight generation, not just execution

3. Scale Both Speed AND Sophistication

- Use the right tool for the right job

- Quick behavioral data when speed matters

- Deep qualitative exploration when understanding "why" is critical

- Predictive models that stay grounded in real human experiences

The Result: An integrated toolkit that extracts predictive intelligence from massive data sets while staying grounded in real human experiences—scaling both speed and sophistication without sacrificing rigor.

Key Lesson: Think systematically about how your tools work together, not just how each performs individually. The whole should be greater than the sum of its parts.

The ABR Framework: Always Be Researching

Here's the core insight: You already have the skills to evaluate research tools. You just need to apply them to your own tool selection process.

You know how to ask questions, test hypotheses, and make evidence-based decisions. The only difference: you're the customer, and platforms are the product.

The Five-Question ABR Framework

1. What job am I hiring this tool to do?

- Be specific about the core function

- Distinguish between "must-have" and "nice-to-have" capabilities

- Example: "I need to recruit 15 B2B decision-makers per week" vs. "I need an end-to-end research platform"

2. What would success look like?

- Define measurable outcomes

- Consider both quantitative metrics (time saved, cost reduction) and qualitative factors (stakeholder satisfaction, research quality)

- Example: "Success means reducing recruitment time from 3 weeks to 3 days while maintaining participant quality standards"

3. What is my hypothesis about the improvement?

- State your assumption explicitly

- Example: "I hypothesize that using a specialized recruiting platform will reduce our time-to-field by 50% compared to our current DIY approach"

4. How will I test that hypothesis?

- Design a proper test (pilot program, phased rollout, A/B comparison)

- Determine what data you'll collect

- Set a timeline for evaluation

- Example: "We'll run our next three studies through the new platform while tracking recruitment time, participant quality scores, and moderator satisfaction ratings"

5. What could prove me wrong?

- Identify potential failure modes

- Define your threshold for "not working"

- Plan how you'll course-correct if needed

- Example: "If participant no-show rates exceed 20% or if total costs increase by more than 15%, we'll reassess"
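Questions 3 through 5 amount to a small decision rule, which can be written down before the pilot starts. The sketch below is one hypothetical way to encode it in Python, using the example thresholds above (a 50% reduction in time-to-field, a 20% no-show ceiling, a 15% cost-increase ceiling). The field names and the three verdict labels are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    baseline_days_to_field: float  # time-to-field with the current approach
    pilot_days_to_field: float     # time-to-field observed during the pilot
    no_show_rate: float            # fraction of participants who did not show up
    baseline_cost: float
    pilot_cost: float


def evaluate_pilot(result, target_reduction=0.50, max_no_show=0.20,
                   max_cost_increase=0.15):
    """Apply the pre-registered thresholds to a pilot and return a verdict."""
    reduction = 1 - result.pilot_days_to_field / result.baseline_days_to_field
    cost_increase = result.pilot_cost / result.baseline_cost - 1

    # Question 5: the failure conditions are checked first.
    if result.no_show_rate > max_no_show or cost_increase > max_cost_increase:
        return "reassess"
    # Question 3: did the hypothesized improvement show up?
    if reduction >= target_reduction:
        return "hypothesis supported"
    return "inconclusive"


# Made-up pilot numbers: 21 days down to 9, 12% no-shows, costs up 10%.
pilot = PilotResult(21, 9, 0.12, 10_000, 11_000)
print(evaluate_pilot(pilot))  # hypothesis supported
```

Fixing the thresholds in advance is the point: it prevents the team from rationalizing a disappointing pilot after the fact.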

Apply This to Your Current Tool Decisions

Pick one tool you're currently evaluating and walk through these five questions. You'll likely uncover:

- Gaps in your current evaluation process

- Assumptions you haven't tested

- Criteria you've overlooked

- A clearer path to making a confident decision

Remember: Success isn't finding a "perfect" stack. It's building the evaluative muscle to continuously improve your toolkit.

How Studio Builds Best-in-Class Research Tools

At Studio by Buzzback, we've taken these principles to heart in how we've built our platform. We don't try to be everything to everyone. Instead, we focus obsessively on what we do best: orchestrating expert qualitative research execution.

Here's How We Lean In and Build Best-in-Class Tools

1. Built by Researchers, for Researchers

Our platform wasn't designed by engineers trying to imagine what researchers need. It was built by practitioners who've conducted thousands of qualitative studies and know the pain points intimately.

- Every feature solves a real problem we've encountered in actual research projects

- Our team includes seasoned moderators, research directors, and insights leaders

- We beta-test with real clients on real studies, not hypothetical use cases

2. A Vetted Moderator Marketplace, Not a "Find Anyone" Platform

Quality qualitative research lives or dies on moderator skill. That's why Studio operates as a curated marketplace:

- Rigorous vetting process: Every moderator in our network has been evaluated for methodology expertise, client communication, and execution quality

- Specialized matching: We help you find moderators with specific industry experience or methodological expertise, not just "anyone available"

- Ongoing quality assurance: Moderators are rated after each project, and we continuously refine our network based on performance

This is fundamentally different from DIY platforms that simply connect you with whoever signs up. We take responsibility for quality because we understand that your research is only as good as the person conducting it.

3. Workflow Tools That Connect, Not Replace

We learned from the leaders we interviewed: the best toolstacks are assembled from specialized solutions, not forced into all-in-one platforms. That's why Studio is built to work with your existing stack:

- Integrations with leading platforms: Connect with the recruiting, analysis, and storage tools you already use

- Flexible solutions that scale: Go full service when you need turnkey execution, or use just the pieces you need for specific projects

- No lock-in: Our platform enhances your workflow without forcing you to abandon tools that work

4. Partner Integrations That Extend Capability

Rather than trying to build everything ourselves, we integrate with best-in-class partners:

- Recruiting partners like User Interviews when you need specialized participant sourcing

- Analysis platforms to streamline your post-fielding workflow

- Collaboration tools that your team already uses

This approach lets us focus our product development resources on what we do uniquely well (moderator matching and project orchestration), all while ensuring you can access best-in-class capabilities for every phase of research.

5. Services That Enhance Product, Not Paper Over Gaps

Some platforms bolt on services to compensate for product weaknesses. We do the opposite: our services extend and enhance a strong product.

- Research consultation: Our team helps you design better studies, not just execute what you hand us

- Methodology guidance: Built into the platform at decision points, plus available on-demand from our team

- Quality oversight: Every project has experienced oversight ensuring your research meets high standards

The Result: Expert Execution Without the Overhead

This is what "middle ground" excellence looks like:

- ✓ The quality and expertise of working with a strategic agency

- ✓ The flexibility and efficiency of a DIY platform

- ✓ The specialized depth of focused tools

- ✓ A cost structure that makes sense for ongoing research programs

We're not trying to be your only research tool. We're trying to be the best tool for what we do, and to integrate seamlessly with the other specialized solutions you use.

Ready to See Studio in Action?

If you're evaluating your qualitative research stack and looking for expert moderator support combined with flexible workflows, let's talk.

See how Studio's vetted moderator marketplace and research orchestration platform can help you deliver higher-quality insights without the agency overhead.

Key Takeaways: Building Your Better Insights Stack

The Core Principles

- Apply research rigor to your tool selection

  - Treat vendor evaluation like a research project

  - Test hypotheses, gather evidence, make data-driven decisions

- Beware the "all-in-one" trap

  - Build your own best-in-class stack from specialized tools

  - Focus on how tools work together, not just individual capabilities

- Demand that vendors speak your language

  - Look for platforms built by researchers who understand methodology

  - Services should enhance products, not compensate for weaknesses

- Start with jobs-to-be-done

  - Define what you're hiring each tool to accomplish

  - Weight functional, emotional, and outcome-based criteria

  - Create scorecards for systematic evaluation

- Always be researching (ABR)

  - Continuously evaluate your stack's performance

  - Be willing to swap tools when better options emerge

  - Build the muscle for ongoing optimization

The Questions That Matter

Before buying:

- What job am I hiring this tool for?

- Does it do that job better than alternatives?

- How will I measure success?

- What could prove me wrong?

During evaluation:

- Are they speaking my language?

- Is quality built into the product or added through services?

- How does this integrate with my existing stack?

- What's the total cost of ownership, including switching costs?

After implementation:

- Is it delivering on the hypothesized improvements?

- What unexpected benefits or challenges have emerged?

- Should I expand use, maintain current level, or consider alternatives?

Your Next Steps

- Audit your current stack

  - Map your research jobs to the tools you're using

  - Identify gaps, overlaps, and underperforming solutions

- Create your evaluation framework

  - Adapt the jobs-to-be-done scorecard to your context

  - Define weights based on your team's priorities

  - Document your criteria for future decisions

- Test incrementally

  - Start with pilot programs, not wholesale replacements

  - Gather data systematically during trials

  - Be willing to course-correct based on evidence

- Build evaluation into your workflow

  - Make tool assessment an ongoing practice, not a one-time event

  - Share learnings across your team

  - Stay current on new solutions without chasing every shiny object

Frequently Asked Questions

Q: Should I consolidate tools to save money?

A: Not necessarily. Consolidation for its own sake often leads to the "flaw-in-one" problem. Focus first on whether each tool does its specific job well and integrates with your stack. Sometimes paying for three specialized tools delivers better ROI than one "do-everything" platform that's mediocre at most functions.

Q: How do I convince leadership to invest in multiple tools instead of one platform?

A: Use the jobs-to-be-done framework to show the business case:

- Demonstrate the specific improvements each tool delivers

- Calculate total cost of ownership including the value of time saved and quality improvements

- Show how specialization reduces risk (if one tool fails, you haven't lost your entire capability)

- Use the Calendly and Verizon examples to illustrate how successful teams take this approach

Q: What if my team doesn't have the expertise to use DIY platforms?

A: That's a signal that middle-ground solutions like Studio might be your best bet—you get expert execution without needing to build internal research chops first. As your team's skills grow, you can adjust your stack. There's no shame in buying expertise when you need it.

Q: How often should I reevaluate my toolstack?

A: For actively used tools, conduct a formal review annually. For tools you're just testing, evaluate after 3-5 projects. But practice continuous informal evaluation too: if a tool consistently frustrates you or underdelivers, don't wait for the formal review cycle to consider alternatives.

Q: What's the biggest mistake teams make when building their stack?

A: Choosing based on demos rather than actual use. Platforms can look impressive in carefully orchestrated demonstrations, but the true test is how they perform in your real workflow with your actual projects. Always insist on hands-on trials before committing.

Q: How do I balance standardization with flexibility?

A: Standardize where consistency matters most (usually recruiting quality, data security, methodological rigor). Stay flexible where needs vary significantly across projects (analysis approaches, reporting formats, stakeholder collaboration). The Verizon example shows how standardization can actually accelerate insight generation when done thoughtfully.

Q: Should I build or buy research tools?

A: Buy unless you have highly unusual needs and significant development resources. The research tool landscape is mature enough that specialized solutions almost certainly exist for your use cases. Building custom tools pulls resources away from actual research and creates technical debt. That said, integrating purchased tools often requires light custom work, and that is a good investment.

Q: What if I'm locked into a contract with a tool that's not working?

A: First, document specifically what's not working and whether it's fixable (maybe you need training or different use cases). If it's genuinely the wrong tool, calculate the cost of continuing vs. the cost of switching, including your team's time. Sometimes paying to exit a bad contract is worth it. Use this experience to inform better evaluation processes for next time.

Q: How do I keep up with new tools without getting distracted by shiny objects?

A: Create a lightweight monitoring system:

- Follow 2-3 industry newsletters or communities

- Attend one landscape overview session per quarter

- Maintain a "tools to watch" list you review semi-annually

- Only deep-dive when you have a specific job that's not being done well by your current stack

The key is staying informed without constantly churning your stack.

Watch the Full Presentation

Want to dive deeper into the frameworks, see the real-world examples in action, and hear directly from Liz White and Ben Wiedmaier?

Watch the Complete Quirks Virtual Global Recording →

The full session includes:

- Detailed walkthrough of the evaluation scorecard

- Extended case studies from Calendly and Verizon

- Live Q&A with audience questions

- Additional examples of research tool ecosystems

- Downloadable framework templates

About the Presenters:

Liz White is Managing Director at Studio by Buzzback, where she leads the qualitative research marketplace connecting brands with vetted expert moderators. With over a decade of research experience spanning strategic agencies and in-house roles, Liz has advised dozens of insights teams on building effective research operations.

Ben Wiedmaier works in Research Relations at User Interviews, a participant recruitment platform that has facilitated over 200,000 research sessions. Ben works with insights teams to optimize their recruiting strategies and improve participant quality.

Ready to build a better insights stack? Start by evaluating where you are today. Download our Jobs-to-Be-Done Evaluation Scorecard and begin mapping your research needs to your current tools.
